Workshop Website Link

Rationale

Robotic Vision is an integrative science, bringing together different technologies, algorithms, and science for vision systems and visual analysis and making them work on a real robot in real time. The Robotic Vision Summer School provides students with an experience of the whole robotic vision system development life-cycle through a structured workshop activity involving programming a small differential drive robot to undertaking a simple but challenging visual identification task.

Teams

The workshop will be done in teams of three (if we have odd numbers, there may be one team of 2). To improve the networking aspect of the workshop we will have a team forming process on the Sunday night to try and split everyone up so you are not working with your colleagues. However, the goal of the workshop is collaborative as well as competitive and you should feel comfortable sharing ideas and even bits of code between teams during the week.

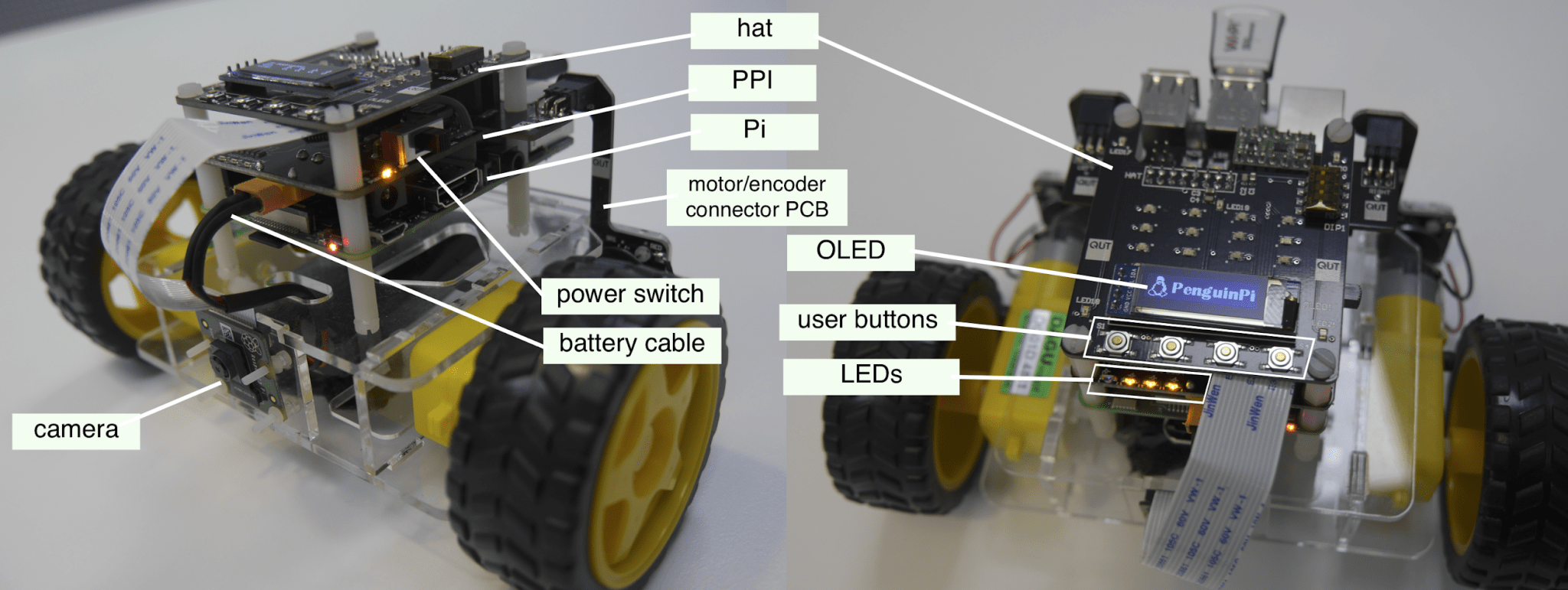

Robot

The PiBot is a differential drive two wheeled robot with equipped with a camera distributed by a QUT spinoff and designed as a teaching aid. The robot has

- A Raspberry Pi 3B computer with a color camera

- an i/o board with embedded processor that interfaces with motors and provides a simple UI (20×4 OLED display, pushbuttons and other LEDs)

- ability to run code onboard written in Python or C++

The programming will be undertaken in Python in an Anaconda environment on one of the machines for the team. One of your team will need to install the anaconda environment on their laptop and then follow the provided instructions to install the Python environment for the workshop. Alternatively, we have a number of laptop computers available to loan for the week if you do not have anaconda or don’t wish to install this on your own machine.

Task

The task is to find the animals in a terrain and report their position and orientation accurately to the team – see Figure 2 below. The task is collaborative. There will be three teams robots in the terrain for each trial. At the end of the trial the three teams will have 15 minutes to collate and fuse their data to provide the best estimates of the animals positions. Each team in a trial will get the combined score for the group of three teams. There will be multiple trials with different teams. Thus, to win you need your co-teams to do well, but somehow save a little bit of extra advantage for yourself to stand out on average.

The terrain is an area 4m by 3m large in the workshop room. One side will be the room wall and one side will be plain pin boards, simplifying the visual background in images and improving visual identification for images taken in these directions. The other two sides will be open and will have of peoples feet, bags, tables, chair legs, movement, etc, providing complex visual background for images. The terrain will have a number (around 15) landmark beacons in unknown positions. These beacons are 10cm by 10cm blocks of wood with ARUCO markers. Code is provided that identifies each ARUCO marker uniquely and provides a bearing and distance for each ARUCO marker visible in a given image. This data is used in a Simultaneous Localisation and Mapping (SLAM) algorithm to build a map and localise the robot. A base level SLAM algorithm will be provided for this task as one of the examples in the workshop tutorials, however, there will be a lot of latitude for improvement and tuning of the algorithm during the workshop sessions.

The robot will be navigated through the terrain by remote control by a pilot who will be sitting behind a screen and will not be able to see the terrain. The pilot will operate the user interface on the team laptop for the robot and will only be able to see graphics that the team has developed during the workshop. The minimum graphics needed are provided as starting code, a visual feed from the camera and a set of navigation and image controls. Teams may choose to add additional graphics such as real time visualisation of map and localisation uncertainty, estimates of confidence in animal identification, classification, and localisation as well as additional semi-autonomous navigation capability as can be imagined.

The second team member is termed a wrangler. This team members is not allowed to view the laptop output, but will stand around the terrain and can view the robot live during the trial. They may shout instructions to the pilot, where to move, where to take images, etc. They will also be allowed certain liberties to touch the robots should they become stuck or leave the terrain.

The third team members can be allocated either as a second pilot or second wrangler as determined by the team.

The position of the landmarks, the starting position of the robots, the positions of the animals, will all be determined by the invigilators for each trial.

Identifying Animals

The core task is to identify and locate the animals in the scene. This task must be done semi-autonomously.

The identification and classification of an animal in an image must be done solely from visual data. The pilot can initiate the capture of a classification image at any time (this could be automated if the team thinks it is a good idea). An automated system must be used to identify and classify animals in the scene and their relative direction from the robot. A deep neural network will be provided for this task as one of the examples in the workshop tutorials. This basic network can be retrained during the week to provide better classification performance or better pose estimation of the animals. The data of classification and pose estimation will be saved in a standardised data format that will be provided.

It is not legal to hand label animal, or separately enter, information relating to animal identification or location from images. The actual initiation of taking an image is pilot controlled. Thus, pointing the robot directly at an animal before taking an image is legal labelling that image as one for animal detection (there will be many more images used for SLAM) is legal. However, once the image is taken, all identification, classification and pose estimation of the animal must be automated.

Evaluation

The teams are evaluated on the accuracy of their estimates of the animals.

For each image taken with an animal identified there is a standard data format provided that will save the location (and uncertainty) of the robot relative to an inertial reference frame, and the relative position of the animal. This data will be saved into a file which is then available following the trial and the three teams will combine data into a single fused data set that can be used for evaluation of the final positions of the animals. Each team will provide a map estimate with uncertainty of the beacons and these maps will be fused to provide a single map. Each identification of an animal will be an independent statistical measurement of the animal position with uncertainty in the location of the robot and the relative pose of the animal. These estimates can be fused to provide a single best estimate of the animal position. The mathematics of the data fusion problem will be presented during workshops and detailed notes will be provided. The code to fuse maps, and then fuse animal pose estimates must be developed individually by each group.

The final score awarded will be based on a least squares cost across the three animal classes that must be identified.